This reliance can shape our behavior. People typically assume that others are telling the truth. That held in this study: even though volunteers knew half of the statements were lies, they marked only 19% of them as lies. But when people chose to use an AI tool, things changed: the accusation rate rose to 58%.

In some ways, this is a good thing: these tools can help us spot more of the lies we encounter in our lives, including the misinformation we may encounter on social media.

But it’s not all good. It can also undermine trust, a fundamental aspect of human behavior that helps us build relationships. If the price of accurate judgment is weakened social bonds, is it worth it?

And then there’s the question of accuracy. In their study, von Schenk and her colleagues were interested only in making tools that are better at detecting lies than humans, which isn’t particularly hard to do, given how bad humans are at it. But she also envisions tools like hers being used routinely to assess the veracity of social media posts, or to look for false details in job applicants’ resumes and interview answers. In these cases, it’s not enough for the technology to be “better than humans” if it is going to lead to more accusations.

Would we accept an 80% accuracy rate, where only 4 out of 5 evaluated statements are correctly interpreted as true or false? Would 99% accuracy be good enough? We’re not sure.

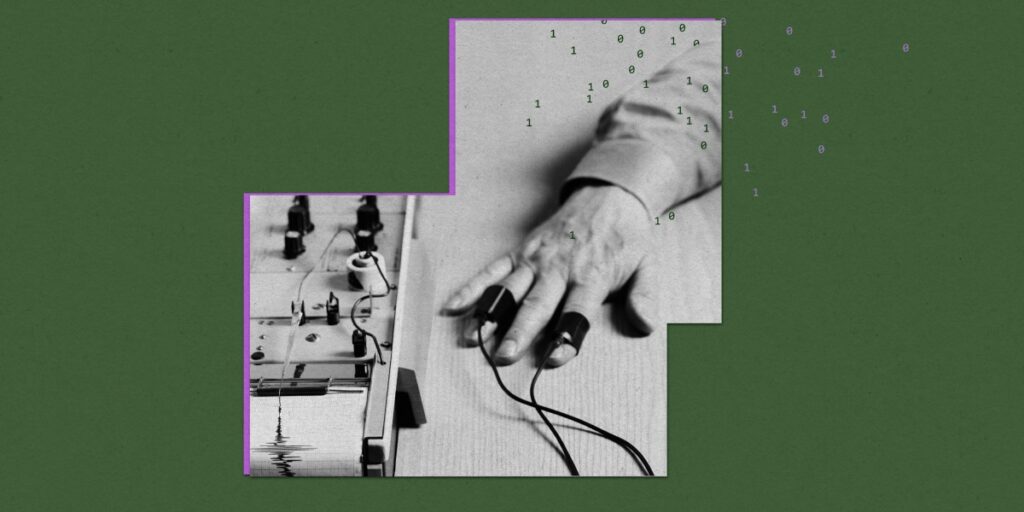

It’s worth remembering how inaccurate lie detector technology has been historically. Polygraphs were designed to measure heart rate and other signs of “arousal” because some signs of stress were thought to be characteristic of liars. But they’re not. And we’ve known that for a long time. That’s why lie detector results are generally not admissible in U.S. courts. Nevertheless, polygraph tests have persisted in some contexts, and have caused a lot of harm when used to accuse people who fail them on reality TV shows.

Von Schenk said imperfect AI tools could have an even bigger impact because they are easier to scale: There is a limit to how many people a polygraph can test in a day, but the scope of AI lie detection is nearly limitless.

“Given how much fake news and misinformation is being spread, these technologies have merit,” von Schenk says, “but they need to be tested in the wild to make sure they perform significantly better than humans.” If AI lie detectors are generating a lot of accusations, she says, it might be best not to use them at all.

Read more about Check Up

Read more from the MIT Technology Review archives

AI lie detectors are also being developed to look for patterns of facial movement and “micro-gestures” associated with lying. As Jake Bittle put it, “The dream of the perfect lie detector will never die, especially once it’s camouflaged with the brilliance of AI.”

Meanwhile, AI is also being used to generate disinformation at scale: as of October last year, generative AI was being used in at least 16 countries to “sow doubt, smear opponents, and influence public debate,” as reported by Tate Ryan-Mosley.

How AI language models are developed can have a major impact on how they behave, and as a result, these models have taken on a variety of political biases, something my colleague Melissa Heikkilä covered in an article last year.

AI, like social media, has the potential for both good and bad. In either case, Nathan E. Sanders and Bruce Schneier argue, the regulatory limitations we place on these technologies will determine which outcome we see.

Chatbot answers are all made up, but there are tools that assign confidence scores to the output of large language models and help users judge how trustworthy it is. Or, as Will Douglas Heaven put it in an article published a few months ago, a BS-o-meter for chatbots.

From the web

British scientists, ethicists, and legal experts have published new guidelines for research into synthetic embryos, or “stem cell-based embryo models” (SCBEMs), as they call them. They say there should be limits on how long these structures can be grown in the lab, and that they shouldn’t be implanted into human or animal uteruses. They also say that if it looks like these structures could one day develop into a fetus, we should stop calling them “models” and start calling them “embryos.”

Antibiotic resistance already causes 700,000 deaths each year and could claim 10 million lives per year by 2050. Overuse of broad-spectrum antibiotics is partly to blame. Is it time to tax these drugs to limit demand? (International Journal of Industrial Organization)

Spaceflight may change the human brain, reorganizing grey and white matter and shifting the brain higher in the skull. Before sending humans to Mars, we need to better understand these effects and how space radiation affects the brain. (The Lancet Neurology)

The vagus nerve has become a surprise social media star, thanks to influencers touting the benefits of stimulating it. Unfortunately, the science isn’t quite backing it up. (New Scientist)

A hospital in Texas is set to become the first in the country to allow doctors to examine patients through holograms. Crescent Regional Hospital in Lancaster has installed the Holobox, a system that projects life-sized holograms of doctors for patient consultations. (ABC News)